Physical AI and the Rise of Generalized Edge Intelligence

Avneesh Agrawal, CEO & Founder, Netradyne

David Julian, CTO & Founder, Netradyne

For years, AI progress was measured in benchmarks, demos, and cloud-based intelligence. But the most consequential shift in AI is happening outside the data center, in the physical world.

We call this Physical AI: intelligence that perceives the real world, reasons in real time, and acts fast enough to matter.

Physical AI is not about post-event analysis. It is about making split-second decisions in dynamic, critical environments: on the road, across industrial sites, anywhere people, vehicles, and assets interact.

Why Physical AI Is Fundamentally Different

In physical environments, latency, reliability, and precision are not theoretical concerns. They are existential.

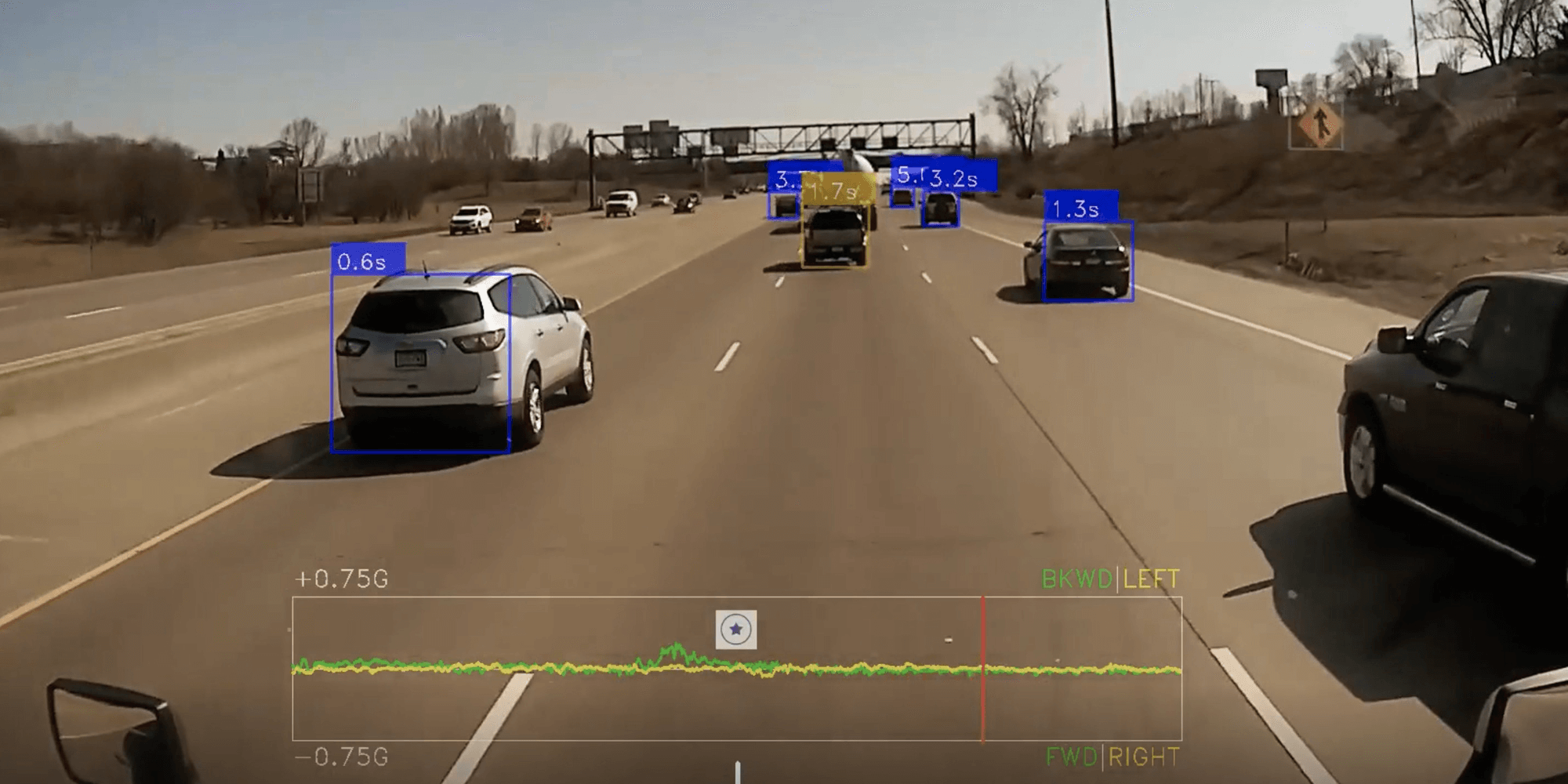

Consider a forward collision warning. It is useless if it arrives after the driver has already used up the safe braking distance. Physical AI systems close the loop on the device: camera frames plus sensors → fused perception → risk estimate → audible alert, all within a bounded latency budget. In a safety-critical loop, "a few seconds later" is not an answer. It is already too late.
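The loop described above can be sketched roughly as follows. This is a minimal illustration, not Netradyne's implementation: the `Frame` fields, the 100 ms budget, and the 2.5-second time-to-collision threshold are all assumptions chosen for the example.

```python
import time
from dataclasses import dataclass


@dataclass
class Frame:
    """Hypothetical fused output of camera and sensor perception."""
    range_m: float            # distance to the lead vehicle
    closing_speed_mps: float  # positive when the gap is shrinking


LATENCY_BUDGET_S = 0.100  # assumed budget, not a figure from the article
TTC_ALERT_S = 2.5         # assumed time-to-collision alert threshold


def time_to_collision(frame: Frame) -> float:
    """Seconds until contact at the current closing speed."""
    if frame.closing_speed_mps <= 0:
        return float("inf")  # gap is steady or growing
    return frame.range_m / frame.closing_speed_mps


def process(frame: Frame, clock=time.monotonic) -> str:
    """Run one perception-to-alert step and enforce the latency budget."""
    start = clock()
    ttc = time_to_collision(frame)
    decision = "alert" if ttc < TTC_ALERT_S else "none"
    elapsed = clock() - start
    if elapsed > LATENCY_BUDGET_S:
        return "late"  # the result missed its deadline; the caller can log it
    return decision
```

Passing the clock in as a parameter keeps the deadline check testable: a fake clock can simulate an overrun without actually sleeping.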

Real Physical AI requires three hard capabilities: real-time reasoning at the edge, high precision under noisy and unpredictable conditions, and proven deployment across millions of real-world interactions.

The Long Tail Is Real

Two clips can look similar in a dataset yet behave differently on the road. Glare from a wet windshield. Partial occlusion by a turning truck. A stop sign on an angled side road, where context, not just the sign, determines whether a stop is required.

Physical AI must be robust to these shifts because the system cannot ask for a clean retake. It has to decide anyway.

Robustness comes from sensor fusion, temporal modeling across frames, and continuous hard-negative mining from real deployments. This is not lab-trained AI. It is AI that learns from the long tail of the physical world.
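As one illustration of hard-negative mining, the sketch below picks out the clips where a model was confidently wrong, so they can be fed back into training. The `Detection` record, the 0.7 score cutoff, and the assumption that reviewed ground-truth labels are available are all hypothetical; the article does not describe how the mining is actually done.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    clip_id: str
    score: float  # model confidence that the event occurred
    label: bool   # reviewed ground truth: did the event actually occur?


def mine_hard_negatives(
    detections: List[Detection], min_score: float = 0.7, limit: int = 100
) -> List[Detection]:
    """Return confident false positives, most confident first."""
    hard = [d for d in detections if not d.label and d.score >= min_score]
    hard.sort(key=lambda d: d.score, reverse=True)
    return hard[:limit]
```

For example, a clip with windshield glare that scores 0.95 for "stop sign violation" but was reviewed as a non-event would rank at the top of the returned list.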

Netradyne's Foundation: Physical AI Deployed at Scale

Netradyne's work in Physical AI began with a real operational problem: how to help drivers make safer decisions in the moments that matter most.

Today, Netradyne's Physical AI is deployed at scale: it analyzes 100% of driving time, reasons directly on the vehicle, and delivers immediate in-cab alerts. This is production Physical AI operating across billions of real-world kilometers.

Most systems see the world in snapshots: sparse, threshold-based detection. Physical AI sees everything: dense, continuous understanding across the entire trip. Netradyne's LiveSearch makes edge systems searchable, with on-device intelligence spanning 100+ hours per vehicle.

In-cab coaching works because it is local. A fleet vehicle driving through dead zones still gets consistent safety behavior because inference lives on the device.

From Physical AI to Generalized Edge Intelligence

Early edge AI systems were built to solve narrow tasks: detect a specific event, trigger a predefined alert. Generalized Edge Intelligence moves beyond task-specific models to systems that continuously understand their environment across people, vehicles, objects, behaviors, and context.

Instead of recognizing isolated events, generalized systems build persistent world models at the edge, capturing how physical environments behave over time. They reason locally and apply intelligence across multiple use cases without requiring new sensors.

One Scene, Many Answers

A single 30-second segment at an intersection can drive multiple outcomes from the same underlying representation:

- Safety: "Was there a rolling stop?"

- Risk assessment: "Was the following distance unsafe given speed plus rain conditions?"

- Operations: "Where do near misses cluster across routes?"

- Training: "Show drivers examples of correct right-of-way behavior."

Generalized Edge Intelligence is when you stop building a new pipeline for every question and start building a continuously updated, searchable model of the world.
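To make the "one representation, many questions" idea concrete, here is a small sketch in which two of the questions above are answered from the same list of scene samples. The `Sample` schema, the 0.5 m/s stop threshold, and the 2-second dry and 3-second wet headway values are illustrative assumptions, not values from Netradyne's system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Sample:
    """One time step of a hypothetical shared scene representation."""
    t: float
    speed_mps: float
    gap_m: Optional[float]  # distance to the lead vehicle, None if there is none
    near_stop_line: bool
    raining: bool


def rolling_stop(scene: List[Sample], stop_speed_mps: float = 0.5) -> bool:
    """True if the vehicle never dropped to a near standstill at the stop line."""
    speeds = [s.speed_mps for s in scene if s.near_stop_line]
    return bool(speeds) and min(speeds) > stop_speed_mps


def unsafe_following(
    scene: List[Sample], dry_headway_s: float = 2.0, wet_headway_s: float = 3.0
) -> bool:
    """True if the time headway fell below the required value at any sample."""
    for s in scene:
        if s.gap_m is None or s.speed_mps <= 0:
            continue
        required = wet_headway_s if s.raining else dry_headway_s
        if s.gap_m / s.speed_mps < required:
            return True
    return False
```

Both functions read the same `scene` list. Adding a third question means adding a third function, not a new capture pipeline.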

Understanding Intent, Not Just Presence

The next frontier is contextual intent reasoning: understanding what actors in the physical world are likely to do, not just that they exist.

A narrow AI system issues generic alerts: "pedestrian ahead" and "cyclist detected." Generalized Edge Intelligence reasons about intent. The pedestrian is stationary and distracted, so the immediate risk is low. But the cyclist's trajectory suggests they will move into the truck's lane to avoid a parked car ahead. The system provides haptic feedback or adjusts throttle response to nudge the driver toward a safer speed before a formal alert is needed.
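A very simple stand-in for this kind of reasoning is constant-velocity extrapolation: given an actor's lateral offset from the truck's lane and its lateral speed, estimate when it would enter the lane. Real intent models are far richer than this. The `Track` fields and the 3-second horizon are assumptions made only for the sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    """Hypothetical tracked actor, measured relative to the truck's lane edge."""
    offset_m: float           # distance outside the lane edge; <= 0 means inside
    lateral_speed_mps: float  # negative when moving toward the lane


def time_to_lane_entry(track: Track) -> Optional[float]:
    """Seconds until the actor crosses the lane edge, or None if it is not approaching."""
    if track.offset_m <= 0:
        return 0.0  # already inside the lane
    if track.lateral_speed_mps >= 0:
        return None  # stationary or moving away
    return track.offset_m / -track.lateral_speed_mps


def risk_level(track: Track, horizon_s: float = 3.0) -> str:
    """Classify risk by whether lane entry is predicted within the horizon."""
    t = time_to_lane_entry(track)
    if t is None:
        return "low"
    return "high" if t <= horizon_s else "low"
```

With these definitions, a stationary pedestrian 2 m from the lane rates "low", while a cyclist 1 m out and drifting inward at 0.5 m/s is predicted to enter in 2 seconds and rates "high".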

This is situational awareness at the edge. It is the path from collision prevention to advanced driver assistance.

The Deployment Moat

Physical AI is not just a model category. It is a deployment moat.

Edge-native systems compound advantages over time. Every kilometer adds rare long-tail data that cannot be replicated in simulation. Every device becomes an always-on sensor. Every improvement ships as software, with no hardware refresh.

As the platform becomes searchable, not just event-triggered, new products emerge without re-instrumenting hardware. Edge compute is finally powerful enough for real-time inference. Foundation models make generalization possible. The pieces are converging.

Netradyne's Vision: Leading the Era of Generalized Edge Intelligence

Netradyne is uniquely positioned to lead this transition.

Years of deployed Physical AI, deep edge infrastructure, and real-world data at unprecedented scale provide the foundation. Millions of cameras deployed. Continuous learning at the edge. Iterative improvement from billions of kilometers.

What comes next is not a single feature. It is a new class of intelligence at the edge: one that continuously understands the physical world, adapts as environments change, and enables safer, more efficient operations everywhere.

At Netradyne, Generalized Edge Intelligence is not an aspiration. It is the natural evolution of Physical AI already operating in the real world: every kilometer, every moment, every day.

For more information about Netradyne's Physical AI platform, Driver•i, and Video LiveSearch capabilities, visit netradyne.com